Day 5. Go beyond multiple choice.

I’ve been reading a lot recently about the pros and cons of multiple choice (selected response) and constructed response questions. If you’re a regular reader of this blog, you’ll know that I am not a great fan of multiple choice questions. I’ve given some of my reasons before, but I’ll attempt to summarise them here.

Some authors point out that there is generally a good correlation between students’ scores on multiple choice and constructed response questions. This may be true, but even if it is, my biggest objection to an over-reliance on multiple choice questions is the message it gives to our students and to wider society. Veloski et al. (1999) titled their paper ‘Patients don’t present with five options’, referring to the fact that doctors must make important decisions about their patients without being presented with options to choose from. The same is true in other disciplines; in short, the multiple choice route is not exactly authentic! I also think (and most, but not all, of the papers I have read on the subject agree) that multiple choice questions lend themselves more to knowledge-based, lower-order learning outcomes.

I am also conscious that it is possible to guess the answers to multiple choice questions (assuming that you’re not using confidence-based marking or similar; see the next post for more on this), so, as an academic, I can’t be entirely sure whether borderline students have got the result they have by good judgement or by good luck. It has been pointed out that a student is unlikely to score well on a multiple choice test by guesswork alone, but that’s not the point: for a borderline student, a few lucky guesses could make quite a difference.
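To put rough numbers on the borderline case, here is a minimal sketch using the binomial distribution. It assumes a hypothetical 20-question test with four options per question and a pass mark of 10, and that every answer the student doesn’t know is a blind guess with an equal chance of picking each option. None of these figures come from a real assessment, and the function names are made up for illustration.

```python
from math import comb


def prob_at_least(successes_needed: int, trials: int, p: float) -> float:
    """Binomial tail: chance of at least `successes_needed` successes in `trials`."""
    start = max(successes_needed, 0)  # already at the pass mark -> need 0 guesses
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(start, trials + 1)
    )


def prob_pass_by_guessing(known: int, total: int, pass_mark: int, n_options: int) -> float:
    """Hypothetical model: chance of reaching `pass_mark` for a student who
    genuinely knows `known` answers and guesses the rest blindly."""
    return prob_at_least(pass_mark - known, total - known, 1 / n_options)


if __name__ == "__main__":
    # Hypothetical test: 20 questions, 4 options each, pass mark 10.
    pure_guesser = prob_pass_by_guessing(known=0, total=20, pass_mark=10, n_options=4)
    borderline = prob_pass_by_guessing(known=8, total=20, pass_mark=10, n_options=4)
    print(f"Pure guesser passes: {pure_guesser:.1%}")
    print(f"Borderline student (knows 8) passes: {borderline:.1%}")
```

Under these assumptions a pure guesser passes only about 1.4% of the time, whereas a student who knows 8 answers needs just 2 lucky guesses from the remaining 12 and passes roughly 84% of the time.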

In addition to problems associated with pure guesswork, some students cultivate test-taking strategies, e.g. eliminating the responses that they know are wrong and then choosing from (say) just two options rather than six. At one level there’s nothing wrong with doing this; it’s an intelligent way of coping with the matter in hand, and when I was a tutor I used to teach my students to do it. However, some students are more ‘test-wise’ than others, which means that you are assessing test-wiseness as well as the subject matter of the test. Some students take training courses in ‘test-wiseness’, so you may even be assessing affluence, i.e. students’ ability to pay. Furthermore, especially if several of the options are similar, more able students may read more into the question than was intended, and so be disadvantaged.
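The effect of eliminating options can be written as a short worked calculation. Assuming (hypothetically) a question with $n = 6$ options, of which a student can confidently rule out $e$, and that they then guess uniformly among the rest, the chance of a correct guess is:

$$
P(\text{correct}) = \frac{1}{n - e}, \qquad
\frac{1}{6 - 0} = \frac{1}{6} \quad\text{versus}\quad \frac{1}{6 - 4} = \frac{1}{2}.
$$

Over, say, 12 such questions, the expected number of marks gained this way is $12/6 = 2$ for a student who cannot eliminate anything and $12/2 = 6$ for one who can rule out four options each time: a four-mark difference arising from the elimination strategy.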

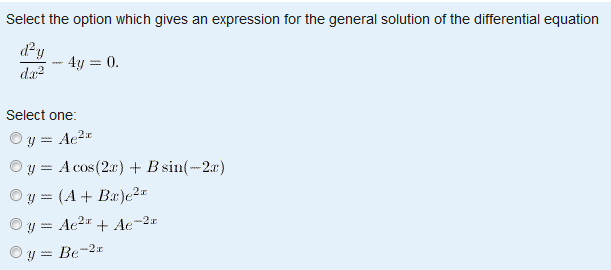

For some questions, test-wiseness extends to working backwards from the options to see which one ‘fits’, rather than answering the question as asked. If a multiple choice question of this type has been set deliberately, that may be OK, but care is needed. In the example shown, students do not need to actually solve the ordinary differential equation to arrive at the answer; they can simply differentiate each of the options to see which one satisfies the equation. Being able to do this is a useful precursor to learning how to solve ordinary differential equations, so this may be OK, but if you think you are assessing students’ ability to solve the equation itself, you’re mistaken!
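As a hypothetical stand-in for the question in the image, the sketch below uses the equation $dy/dx = -2y$ with four made-up options. It mimics the ‘working backwards’ strategy: rather than solving the equation, it estimates each option’s derivative numerically (a central difference, in place of differentiating by hand) and checks whether that matches the right-hand side at a few sample points. The equation, the options and the helper names are all illustrative assumptions.

```python
import math
from typing import Callable, Dict, Iterable

Func = Callable[[float], float]


def central_difference(f: Func, x: float, h: float = 1e-5) -> float:
    """Numerical estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def satisfies_ode(
    y: Func,
    rhs: Callable[[float, float], float],
    xs: Iterable[float],
    tol: float = 1e-6,
) -> bool:
    """True if the estimated y'(x) matches rhs(x, y(x)) within tol at every point."""
    return all(abs(central_difference(y, x) - rhs(x, y(x))) < tol for x in xs)


# Hypothetical ODE: dy/dx = -2y
def rhs(x: float, y: float) -> float:
    return -2 * y


# Hypothetical multiple choice options
options: Dict[str, Func] = {
    "A: y = e^(2x)": lambda x: math.exp(2 * x),
    "B: y = e^(-2x)": lambda x: math.exp(-2 * x),
    "C: y = x^2": lambda x: x**2,
    "D: y = -2x": lambda x: -2 * x,
}

sample_points = [0.0, 0.5, 1.0, 1.5]
fits = [label for label, y in options.items() if satisfies_ode(y, rhs, sample_points)]
print("Options that fit:", fits)
```

Checking several points matters: option C happens to agree with the equation at $x = 0$ (both sides are zero there), so a single-point check would wrongly accept it.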

Even when it is not possible to work backwards from the options, their presence limits the possibilities. If you do a calculation and your answer is not among the options, it is reasonable to assume that your answer is wrong, which is a fairly large hint! However, this doesn’t necessarily make multiple choice questions easier. Question authors sometimes assume that their distractors cover the only likely incorrect answers, so if your answer isn’t one of them, you may be left guessing from amongst the options offered, and you do not receive feedback appropriate to your actual misunderstanding. A number of authors have reported that when constructed response versions of multiple choice questions are offered, students give many responses (some of them quite frequently) that were not offered as distractors in the multiple choice version.

A couple of papers about the ‘testing effect’ (the way in which taking a test can improve later recall) report that constructed response tests are more effective than multiple choice ones, perhaps because people remember the incorrect distractors rather than the correct answer. This finding is not universally accepted (and feedback can help to correct the problem), but my intention in this post is not to give a balanced view; rather, it is to explain some of the problems and perceived problems with multiple choice questions. I’ll redress the balance a little tomorrow.