After the flurry of technical activity earlier in the project, May has been a quieter month. We’ve spent it mainly arranging the evaluation work that starts in June and looking at some of the early feedback from the ongoing user survey.

User evaluation work

Any research with students at the OU has to be approved by an ‘ethics’ committee, the Student Research Project Panel. We complete a fairly lengthy template that outlines the research we plan to do, who we will involve, how we will carry out the research and what Data Protection processes we have in place. That goes to the panel for assessment and, all being well, the evaluation activity is approved.

At the OU, apart from dealing with the ethical basis of the research, the process also regularises contact with students so that they aren’t deluged with requests and emails. As a distance learning institution, the OU contacts students largely by email, so it’s important that students can control how much is sent to them, because the pace of study can be intensive. For this reason students opt in to being available for research of this type.

Once the project is approved, we are sent contact details for the students we are allowed to approach about taking part in the evaluation. For RISE we’ve had quite a good response and have arranged the first few one-to-one interviews, starting tomorrow. Other students have said they are interested in trying out MyRecommendations online and completing the feedback.

Feedback so far

When we set up the RISE interface we added a feedback link to a survey on SurveyMonkey. This has let us collect some user responses more immediately.

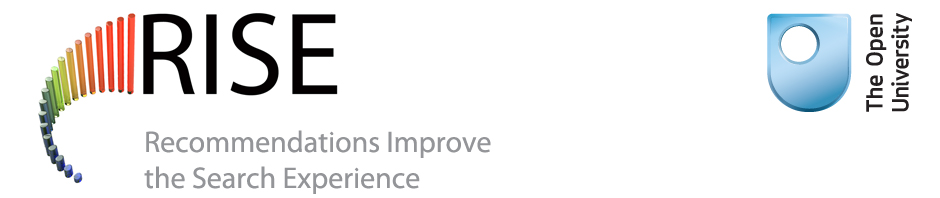

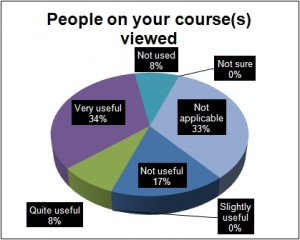

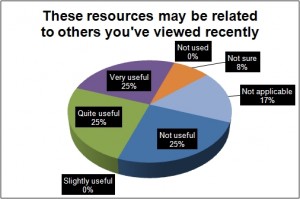

We asked about each of the different types of recommendation that we provide, so that people could tell us how useful they find them.

For course recommendations, i.e. ‘People on your course viewed’, more than 40% rated them Very or Quite useful. Users who aren’t on a course don’t get any course recommendations, which should account for the 33% who answered ‘Not applicable’. Course recommendations are based largely on the EZProxy logfiles, so they have the largest amount of data to draw on.
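To illustrate how a ‘People on your course viewed’ list could be derived, here is a minimal Python sketch. It assumes the logs have already been reduced to (user, article) view pairs and that each user’s course registrations are known; the function name, the data shapes and the simple view-count ranking are hypothetical choices for illustration, not the RISE implementation. It also shows why a user with no course gets nothing back.

```python
from collections import Counter
from collections.abc import Iterable


def course_recommendations(
    views: Iterable[tuple[str, str]],
    user_courses: dict[str, set[str]],
    user: str,
    top_n: int = 5,
) -> list[str]:
    """Rank articles viewed by other users who share a course with `user`.

    views: (user_id, article_id) pairs, e.g. distilled from proxy logs.
    user_courses: user_id -> set of course codes (hypothetical shape).
    """
    my_courses = user_courses.get(user, set())
    if not my_courses:
        # Not registered on a course: no course recommendations at all.
        return []

    counts = Counter()
    already_seen = set()
    for viewer, article in views:
        if viewer == user:
            already_seen.add(article)  # don't recommend what they've read
        elif user_courses.get(viewer, set()) & my_courses:
            counts[article] += 1  # one vote per view by a course-mate

    # Most-viewed first; ties keep the order articles were first counted.
    ranked = [a for a, _ in counts.most_common() if a not in already_seen]
    return ranked[:top_n]
```

Ranking by raw view counts is the simplest possible choice; a real service might weight recent views or count each course-mate only once.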

The second type of recommendation tries to relate an article you’ve viewed to similar articles, on the assumption that articles a user views in sequence are related. Here 50% rated them Very or Quite useful, but a larger number rated them Not useful. There does seem to be a ‘marmite’ effect, where recommendations are seen as either relevant or not. That could be down to the quantity of recommendation data, as RISE currently relies on data collected since the interface went live.
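The sequential idea can be sketched as counting which article a user opens immediately after another. This is a minimal Python illustration assuming per-user view histories in time order; the function names, the forward-only (A then B) direction and the rule of ignoring repeat views of the same article are assumptions, not a description of how RISE actually computes these links.

```python
from collections import Counter, defaultdict


def build_follow_counts(sessions: dict[str, list[str]]) -> dict[str, Counter]:
    """Count how often article B is viewed immediately after article A.

    sessions: user_id -> article ids in the order that user viewed them.
    """
    follows: dict[str, Counter] = defaultdict(Counter)
    for articles in sessions.values():
        for current, nxt in zip(articles, articles[1:]):
            if current != nxt:  # ignore repeat views of the same article
                follows[current][nxt] += 1
    return follows


def related_articles(follows: dict[str, Counter], article: str, top_n: int = 5) -> list[str]:
    """Articles most often viewed straight after `article`."""
    return [a for a, _ in follows.get(article, Counter()).most_common(top_n)]
```

With little data, a single user’s browsing path may be the only evidence behind a recommendation, which is consistent with the suggestion above that data quantity may explain the mixed ratings.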

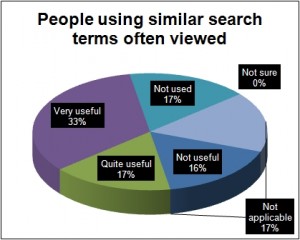

The third type of recommendation relates the search terms used to the articles viewed. Again 50% saw these as Very or Quite useful, but a smaller percentage saw them as Not useful. As with the second type, these recommendations are powered by search terms entered into the RISE interface, because we don’t have the search terms behind the EZProxy data.
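A search-term recommendation of this kind can be sketched as an index from a normalised search string to the articles opened after that search. The Python below is a minimal illustration; the (search_term, article_id) event shape, the function names and the exact-phrase matching after lower-casing and whitespace cleanup are all assumptions rather than the RISE logic.

```python
from collections import Counter, defaultdict


def normalise(term: str) -> str:
    """Lower-case and collapse whitespace so trivial variants match."""
    return " ".join(term.lower().split())


def build_search_index(events) -> dict[str, Counter]:
    """events: (search_term, article_id) pairs, one per article opened
    from the results of that search (hypothetical log shape)."""
    index: dict[str, Counter] = defaultdict(Counter)
    for term, article in events:
        index[normalise(term)][article] += 1
    return index


def search_recommendations(index: dict[str, Counter], term: str, top_n: int = 5) -> list[str]:
    """Articles most often opened after the same (normalised) search."""
    return [a for a, _ in index.get(normalise(term), Counter()).most_common(top_n)]
```

Exact matching keeps the sketch simple but means "climate change" and "climate changes" are treated as different searches; stemming or word-level matching would be a natural next step.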

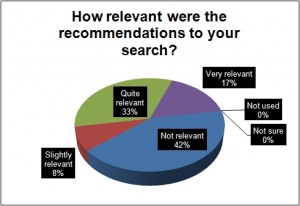

The final question tried to find out more about the relevance and quality of the results. Here there was a much more definite Not Relevant response, at 42%, but 50% saw the results as Very or Quite relevant. So again there is something of a ‘marmite’ response, which merits more detailed investigation to understand why.

EDINA OpenURL data openly released

A few days ago came the great news that EDINA have released their OpenURL data (http://openurl.ac.uk/doc/data/data.html). We’ve been looking at the data to see how it could help with RISE recommendations. At nearly 300,000 rows the dataset is larger than what we have in RISE, and although it doesn’t include any search terms, we think there are ways to use it with RISE. We have scheduled some time later this month to set up a RISE parser to ingest the data and test it. It’s a great example to us all, and it will be interesting to see what can be done with the data.
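As a starting point for such a parser, here is a minimal Python sketch that reads delimited log lines and groups item identifiers by session. It assumes the files are tab-delimited and that one column identifies a user or session and another an item (such as an ISSN); the function name and the column positions are hypothetical placeholders, not EDINA’s documented field layout, and should be checked against the documentation at the URL above.

```python
import csv
from collections import defaultdict
from collections.abc import Iterable


def read_openurl_rows(
    lines: Iterable[str],
    session_col: int,
    item_col: int,
    delimiter: str = "\t",
) -> dict[str, list[str]]:
    """Group item identifiers by session, preserving file order.

    session_col and item_col are zero-based column positions; they are
    placeholders, not the documented layout of the EDINA files.
    """
    sessions: dict[str, list[str]] = defaultdict(list)
    for row in csv.reader(lines, delimiter=delimiter):
        if len(row) <= max(session_col, item_col):
            continue  # skip short or malformed rows
        session, item = row[session_col].strip(), row[item_col].strip()
        if session and item:  # skip rows with an empty key or item
            sessions[session].append(item)
    return dict(sessions)
```

In practice the function would be passed an open file handle (opened with `newline=""`), and the resulting per-session sequences could then feed the same kind of co-occurrence counting used for the RISE recommendations.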